How desktop and GPU virtualisation power up automotive innovation

Bertrand Boisseau

on 17 October 2022

Tags: Automotive

Autonomous vehicles are all over the media these days. But what of the technologies that make them possible? In a previous blog post, we covered the many fascinating use cases for digital twins and their applications for the development of self-driving cars. But with the race towards autonomy becoming fiercer, the costs of using these new enabling technologies are rising exponentially. Moreover, the need for talent and experts across the world is forcing companies to shift to remote work. You’ve probably heard of virtual desktop infrastructures (VDI) and virtual GPUs (vGPUs), but why would you need them and how could they help your company?

Setting the stage for autonomous vehicle validation

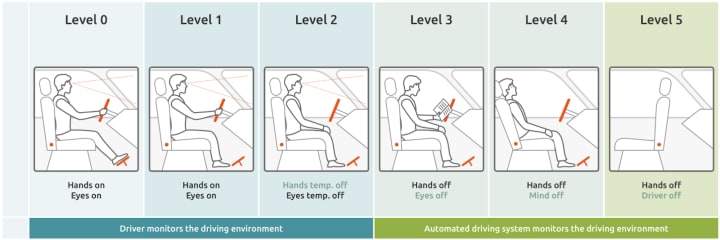

Let’s start with a bit of context. To validate autonomous driving (AD) and advanced driver-assistance systems (ADAS), algorithms have to go through intense simulations. These simulations require building virtual scenes that replicate very realistic environments where driving scenarios can be tested. Take Bob. Bob works for a major OEM and is responsible for the development of an active safety system which is aiming for level 3 AD. According to the SAE, level 3 of autonomy means that the driver can keep their hands off the wheel and eyes off the road in certain situations (see the different levels below). We’ll follow Bob as he goes through the different stages of development and validation.

Validating AD vehicles requires millions of miles to be driven. It’s true that road testing is critical, but it has major caveats. First of all, it costs a lot to keep a fleet of vehicles driving on the road (millions of euros per year). The second and most important caveat is that it’s close to impossible to encounter the millions of possible scenarios that could occur in real life. Moreover, if you experience a given scenario on the road, how do you then replicate it? The solution is to evaluate the algorithms in simulation, where a given scenario can be replayed as often as needed. And that’s exactly what our friend Bob plans to do.

Early work on simulations kicks off

Bob is going to simulate AD vehicle algorithms in a realistic 3D environment. He has great ideas and starts working on the project on his laptop, which relies on a regular CPU. He uses Ubuntu Desktop because it gives him access to supported plug-and-play AI/ML stacks which include all of the tools required for training his algorithms, such as TensorFlow or PyTorch.

Unfortunately, his 3D models require more graphical performance than his laptop can deliver. The 3D environment and road network are displayed properly, but the fusion of simulated sensor data and perception is slowing the whole process down. On top of that, Bob wants the perceived sensor data to be displayed in the same view, along with a decision tree that computes what the vehicle sees to determine its next actions.

GPUs add much-needed power but scalability issues arise

The first obvious fix is to equip Bob’s laptop with a GPU, which is what he does. It’s a pretty expensive one for that matter. In the meantime, Bob’s project is gaining traction and his company has hired additional developers to work on the project with him. Bob decides to use Landscape in order to ensure that all of the team’s machines are using the same software tools, versions and patches. This enables the team to have their own software repository.

The latest deliverable provides realistic vehicle damage estimates and the required graphical resources are growing. Buying a more powerful desktop-class GPU won’t cut it, plus that would need to be done for each machine the development team uses. This doesn’t scale very well.

On top of that, Bob’s team is planning on adding computational fluid dynamics models to the vehicle simulation. This requirement has been added by another team in the company that wants to understand the reliability of specific components under intense conditions. While not the most power intensive, these computations add latency to the overall simulation.

Enter VDIs

After discussing it with the team, Bob has decided to build a cloud and to run VDIs. Bob’s team chose to use Ubuntu Server because of the long-term support and maintenance that comes with all packages and tools. Plus, they learned that 65% of all workloads in public clouds run on Ubuntu Server. Now, thanks to VDI, the team can access their virtual workspaces from anywhere in the world and on any device.

Things are looking better; his team can work remotely. They can pass through GPU devices to VMs. All of the scenarios are running smoothly. The team decides to add pedestrians, animals and complex traffic elements to the environment.

The team received reports from a road testing team about complex situations encountered under specific circumstances. After going through the issue details, it appears that the algorithms don’t behave correctly when the weather conditions are rough and when lighting produces localised glare in front of certain sensors.

Bob decides to add weather conditions to their simulation as well as sun and light elements. This allows them to observe how changes in sun angle and light reflections on surrounding elements like buildings, vehicles and water puddles influence vehicle responses. Moreover, the team wants to provide even more realistic physical simulations capable of precisely predicting objects’ paths.

The required resources for the simulations are rising and so are costs. Although Bob’s management is excited about the team’s ambitions, they also want to stabilise costs. To manage the budget, Bob is now asked to consider descoping the features. Before removing features, Bob and the team decide to get together and identify options to optimise resources.

It turns out that by passing through GPU devices to VMs, Bob’s team was wasting resources. With GPU passthrough, each physical GPU is dedicated to a single virtual machine at a time, even when that VM only uses a fraction of it. They need to enable multiple VMs to access shared GPU resources.
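To make the waste concrete, here is a rough back-of-the-envelope sketch. It assumes GPU memory is the only constraint and that VMs can be packed freely onto a shared GPU, which is a simplification of how real GPU sharing works. The function names, the per-VM memory demands and the 24 GB card size are all hypothetical values chosen for illustration.

```python
def gpus_needed_passthrough(vm_demands_gb):
    """With passthrough, every VM holds a whole physical GPU."""
    return len(vm_demands_gb)


def gpus_needed_shared(vm_demands_gb, gpu_memory_gb):
    """Pack VM memory demands onto shared GPUs (first-fit decreasing)."""
    free = []  # remaining memory on each GPU already in use
    for demand in sorted(vm_demands_gb, reverse=True):
        if demand > gpu_memory_gb:
            raise ValueError("a single VM needs more memory than one GPU has")
        for i, remaining in enumerate(free):
            if demand <= remaining:
                free[i] -= demand  # the VM fits on a GPU already in use
                break
        else:
            free.append(gpu_memory_gb - demand)  # bring one more GPU into use
    return len(free)


# Hypothetical GPU memory needs (in GB) for eight developer VMs.
demands = [8, 8, 4, 4, 4, 12, 6, 2]

print("passthrough:", gpus_needed_passthrough(demands))
print("shared:", gpus_needed_shared(demands, gpu_memory_gb=24))
```

With these made-up numbers, passthrough ties up eight physical GPUs, while the shared model fits the same VMs onto two 24 GB cards.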

vGPUs save the day

After studying multiple solutions, Bob’s team finally chose to use NVIDIA virtual GPUs (vGPU). The team was pleasantly surprised to learn that Ubuntu Server supports native host and guest drivers for NVIDIA vGPU, meaning they didn’t need to change all of their infrastructure.

They can now provision virtual GPU devices on-demand, without buying additional hardware. This allows them to allocate GPU resources on the fly to VMs. By doing so, not only do they increase efficiency by improving resource consumption, they also accelerate the way their developers work. No more costly wasted resources. The team gets the compute power they need, when they need it.

Bob’s team has been able to expand the project and add thousands of scenarios that can run in parallel in different virtual environments for reliability tests.
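The idea of fanning out many reproducible scenarios can be sketched in a few lines of Python. This is only an illustration, not Bob’s actual tooling: `run_scenario` and `run_batch` are hypothetical names, the "simulation" is a seeded random number standing in for a real simulator, and a local thread pool stands in for dispatching jobs to vGPU-backed VMs. Seeding each scenario with its ID means any failure can be replayed exactly, which is the property road testing lacks.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def run_scenario(scenario_id):
    """Stand-in for one simulation run; returns (id, passed)."""
    rng = random.Random(scenario_id)     # same ID always gives the same run
    min_gap_m = rng.uniform(0.0, 10.0)   # hypothetical closest-approach metric
    return scenario_id, min_gap_m >= 1.0


def run_batch(scenario_ids, workers=8):
    """Run scenarios concurrently and collect results keyed by scenario ID."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(run_scenario, scenario_ids))


results = run_batch(range(1000))
failed = sorted(sid for sid, passed in results.items() if not passed)
print(f"{len(results)} scenarios run, {len(failed)} failed")

# Any failing scenario can be replayed on its own with identical results.
if failed:
    print(run_scenario(failed[0]))
```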

Your team and your resources alike can be agile

The events and characters depicted in this blog are fictitious. Any similarity to actual persons is most definitely coincidental. Still, if Bob’s situation resonates with you and your team, contact us to learn more about powering up your AD development with Ubuntu.

Curious about automotive at Canonical? Check out our webpage.
